Feed aggregator

You still need reliable and tested backups for your Oracle databases!

These days, I was reviewing and updating the “Oracle Backup and Recovery Workshop” from dbi services. It’s all about RMAN, a powerful tool that always impresses my students. But I must admit that this workshop is less popular than before. Today, backup of an Oracle database is not a hot topic anymore. First, nothing is really new regarding RMAN compared to a few years ago. Hardware reliability has also increased significantly, and data centers are more secured than ever. Both making failures less likely to happen. Another point is that Disaster Recovery has become a standard in most projects, meaning that for a lot of failures, enabling the standby database will solve the problem in a minute or two. Finally, Oracle-embedded flashback technologies bring you faster solutions compared to restoring a backup when database is not broken (these technologies are also part of the workshop). That said, it doesn’t mean that you don’t need a strong backup strategy. This is still your very last protection for dramatic scenarios. And you need to test it from time to time. Let’s review what’s important and how to check and test your backup strategy.

Why you still need a reliable backup at any moment?Let’s remind the main reasons for having a reliable backup:

- you may loose everything on your primary and secondary site

- you may have logically corrupted data from a software error or a human error, also replicated to your standby database

- you may want to recover partial data that flashback technologies cannot bring back

- you may have a security breach widely altering your data (corrupted storage, ransomware, aso)

For sure, this is rather unlikely to happen, but as a DBA, you’re supposed to have a plan in case of any kind of disaster. And a solid RMAN backup is your trustable ally.

Why you probably won’t use a RMAN restore in most cases?You probably have a Disaster Recovery (DR) setup, meaning 2 sites with database replication using Data Guard (for Enterprise Edition) or Dbvisit Standby (for Standard Edition 2). In most cases, if you need a quick solution for bringing back your database to life after a crash, you will failover to the DR database. It’s a couple of minutes, and if you test your DR database on a regular basis (I mean doing a switchover at least once a year), this operation will succeed without any doubt. A failover is adequate when your primary server is not responding or when your primary database has corrupted files. Doing a failover is always the best thing to do if you want minimum data loss.

If you need data loss, I mean if there is a data corruption due to an error (incorrect SQL commands, application bug), you will probably use the Oracle-embedded flashback technologies. Flashback relies on multiple different technologies for different purposes. If you drop a table, you can bring it back from the recycle bin (FLASHBACK TABLE TO BEFORE DROP), if you delete lines from a table, you can do a query in time (AS OF TIMESTAMP) for example. If the overall database needs to go back in time, and if you’re using Enterprise Edition, you will use FLASHBACK DATABASE. FLASHBACK DATABASE, once enabled, is a mechanism that generates flashback logs, a kind of “INCR -1” automatic backup. With this feature, you can go back at any point in time from a couple of minutes to several hours. It’s much faster than an equivalent RMAN restore. And you can do multiple flashback operations to find the desired Point In Time.

Restoring a backup will be done when nothing else is possible, just because it’s slower to put back older datafiles on disk from backupsets, and recover them.

Probably the most important: make sure RPO and RTO are aligned with the company strategyAs a DBA, you must ensure that these metrics, defined by the company, are met:

- Recovery Point Objective: until when you must be able to go back in time regarding data

- Recovery Time Objective: the maximum time you need to restore the database to any Point In Time within the RPO

You should consider these RPO/RTO as a contract between the DBA and the management. The DBA will require resources, like adequate infrastructure, disk capacity and licenses to reach these objectives.

Regarding RPO, it’s mainly a matter of storage capacity. For RTO, it involves considering database edition, number of licenses/cores and storage bandwidth.

You must test your backup on a regular basis, just to ensure that you still respect the RTO with an increasing amount of data months after months.

Too often, RPO and RTO are defined by the DBA himself. In other words, the DBA makes what’s possible with the resources he has. This is definitely not the best approach.

Check and test your backupsFirst, you should never trust your backup! Not because it’s not reliable, but because backup is done when the database is opened, meaning that backups are not consistent. Restore is only possible if a complete set of backups (full + incremental + archivelogs) is available, with an adequate controlfile. Green lights from your backup monitoring tool or the successful word at the end of your backup log is simply not enough. The only trustable backup strategy is the one you test on a regular basis.

These are the checks and tests I would do to make sure my backup is OK.

Check RMAN viewsWith this statement, you are able to guess the backup strategy and see if it works fine at a glance.

set lines 180

set pages 300

col min for 999.9

col status for a20

alter session set NLS_DATE_FORMAT="DD/MM-HH24:MI";

select start_time "Start", round (input_bytes/1024/1024,1) "Source MB", round(output_bytes/1024/1024) "Backup MB", input_type "Type", status "Status", round(elapsed_seconds/60,1) "Min", round(input_bytes/1024/1024/elapsed_seconds,1) "read MB/s", round(output_bytes/1024/1024/elapsed_seconds,1) "write MB/s", round(compression_ratio,1) RATIO from v$rman_backup_job_details where start_time >= SYSDATE-7 order by 1 desc;

Start Source MB Backup MB Type Status Min read MB/s write MB/s RATIO

-------------- ---------- ---------- ------------- ------------ ------ ---------- ---------- ----------

25/08-15:10:06 1443 380 ARCHIVELOG COMPLETED .7 36.1 9.5 3.8

25/08-14:10:06 123 50 ARCHIVELOG COMPLETED .1 20.6 8.3 2.5

25/08-13:10:05 33 27 ARCHIVELOG COMPLETED .1 6.5 5.4 1.2

25/08-12:10:05 32 27 ARCHIVELOG COMPLETED .1 8 6.8 1.2

25/08-11:10:05 46 31 ARCHIVELOG COMPLETED .1 7.7 5.1 1.5

25/08-10:10:05 44 31 ARCHIVELOG COMPLETED .1 7.4 5.1 1.4

25/08-09:10:04 31 27 ARCHIVELOG COMPLETED .1 5.1 4.5 1.1

25/08-08:10:05 32 27 ARCHIVELOG COMPLETED .1 8 6.8 1.2

25/08-07:10:05 30 26 ARCHIVELOG COMPLETED .1 4.9 4.4 1.1

25/08-06:10:05 30 26 ARCHIVELOG COMPLETED .1 5.9 5.3 1.1

25/08-05:10:04 30 27 ARCHIVELOG COMPLETED .1 5 4.4 1.1

25/08-04:10:05 32 27 ARCHIVELOG COMPLETED .1 8 6.8 1.2

25/08-03:10:05 38 29 ARCHIVELOG COMPLETED .1 6.3 4.9 1.3

25/08-02:10:05 30 27 ARCHIVELOG COMPLETED .1 5 4.4 1.1

25/08-01:10:05 30 26 ARCHIVELOG COMPLETED .1 5.9 5.3 1.1

25/08-00:10:05 39 30 ARCHIVELOG COMPLETED .1 7.8 6 1.3

24/08-22:30:06 133858 17371 DB INCR COMPLETED 26.3 84.8 11 7.7

24/08-22:10:05 1238 376 ARCHIVELOG COMPLETED .5 41.3 12.5 3.3

24/08-21:10:05 29 26 ARCHIVELOG COMPLETED .1 7.2 6.6 1.1

24/08-20:10:05 29 26 ARCHIVELOG COMPLETED .1 4.1 3.8 1.1

24/08-19:10:05 34 28 ARCHIVELOG COMPLETED .1 5.6 4.6 1.2

24/08-18:10:05 39 29 ARCHIVELOG COMPLETED .1 7.8 5.8 1.3

24/08-17:10:05 1037 204 ARCHIVELOG COMPLETED .5 35.8 7 5.1

24/08-16:10:05 42 31 ARCHIVELOG COMPLETED .1 6 4.4 1.4

...

In this example, everything is fine (Status=COMPLETED), a full (INCR level 0) is done every night, archivelog backup is done every hour and compression is enabled. Here, read MB/s and write MB/s give you estimated values. If you use incremental backups (INCR level 1), you can identify them with the “Backup MB” column: the figure should be much lower than the one of an INCR level 0.

Having a catalog is definitely more convenient as you can get an overview for all your databases from a unique statement:

set lines 180

set pages 300

col min for 999.9

col status for a20

alter session set NLS_DATE_FORMAT="DD/MM-HH24:MI:SS";

select db_name, start_time "Start", round (input_bytes/1024/1024,1) "Source MB", round(output_bytes/1024/1024,1) "Backup MB", input_type "Type", status "Status", round(elapsed_seconds/60,1) "Min", round(input_bytes/1024/1024/elapsed_seconds,1) "read MB/s", round(output_bytes/1024/1024/elapsed_seconds,1) "write MB/s", round(compression_ratio,1) RATIO from rc_rman_backup_job_details where start_time >= SYSDATE-7 order by 1,2 desc;

...But this query is not enough to make sure that you can restore your database.

Check if retention is the one you expectIt’s not rare that a DBA is surprised when he checks backup retention and discovers a much lower value than expected. Retention can be changed in a minute, often for solving storage capacity issues. This could have dramatic consequences if you miss the RPO your boss sign up for. But most probably, nobody will know about that…

rman target /

show RETENTION POLICY ;

RMAN configuration parameters for database with db_unique_name ROP_S1 are:

CONFIGURE RETENTION POLICY TO RECOVERY WINDOW OF 7 DAYS;With this retention, you should be able to restore the database at a consistent state between now and 7 days before. It implies keeping backupsets of database for more than 7 days, because you need the FULL or INCR 0 backup before 7 days to reach the oldest Point In Time. And for sure, all the INCR 1 and archivelog backups done after this FULL or INCR 0.

But checking the retention is not enough to make sure you have everything needed for a restore.

Check if backups are known by RMANWhen deleting backups, this retention can be overridden if needed. So there is no guarantee that backups are still in the catalog. You should be able to find backups within this retention, backups of database (FULL or INCR 0) but also backups of archivelogs and INCR 1:

LIST BACKUP OF DATABASE COMPLETED BETWEEN 'sysdate -7' AND 'sysdate';

...

LIST BACKUP OF ARCHIVELOG COMPLETED BETWEEN 'sysdate -7' AND 'sysdate';

...This is pretty verbose but you will find the various backusets and what’s inside.

Unfortunately, this is still not enough to make sure you can restore your database.

Do a crosscheck to validate that backup pieces are availableLIST BACKUP is only having a look at backup references in the catalog, it doesn’t guarantee that backupset files (backup pieces) are available. Therefore, a crosscheck is needed to match references with files on disk:

CROSSCHECK BACKUPSET;

You can do crosscheck only on a subset of backups, for example the most recents:

CROSSCHECK BACKUPSET COMPLETED AFTER 'sysdate -1';

...Or backups between 2 dates:

CROSSCHECK BACKUPSET COMPLETED BETWEEN 'sysdate -7' AND 'sysdate -6';

...All backupsets must be AVAILABLE. If not, they are EXPIRED, meaning not on disk anymore. This is not a normal behavior.

Is it enough for making sure you can restore? Not at all, it doesn’t mean the restore is possible.

Do a restore database validate with and without a Point In TimeWithout any downtime and disk usage, you can do a RESTORE DATABASE VALIDATE. It will read backupsets from the backup location and validate that these backupsets are OK to restore the datafiles.

run {

ALLOCATE CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE CHANNEL C3 DEVICE TYPE DISK;

RESTORE DATABASE VALIDATE;

}This RESTORE DATABASE VALIDATE is also possible with a Point In Time. RMAN is then able to simulate a full restore of the adequate backupsets within the rentention.

run {

SET UNTIL TIME "TO_DATE('10-OCT-2025 14:00:00','DD-MON-YYYY HH24:MI:SS')";

ALLOCATE CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE CHANNEL C3 DEVICE TYPE DISK;

RESTORE DATABASE VALIDATE;

}Note that it restores the datafiles to /dev/null, meaning nowhere. This is why a RECOVER DATABASE VALIDATE does not exist, it’s the main limit. You will be able to restore the database, but you might not be able to recover the datafiles and open the database.

This test is nice, but not good enough. The recover is mandatory to open the database.

Do a restore AND recover on another server with or without a Point In TimeThe best test would be a complete restore/recover on another server. Considering this server has the same system settings, the same Oracle version and the same filesystems, it’s rather easy. First, identify the latest controlfile and spfile autobackup, then restore the spfile and the controlfile from this backupset. RMAN is able to start an instance without spfile, for the only purpose of restoring the spfile:

su - oracle

echo "BMC3PRD:/u02/app/oracle/product/19.0.0.0/dbhome_1:Y" >> /etc/oratab

ls -lrt /backup/BMC3PRD/ | tail -n 1

-rw-r-----. 1 oracle oinstall 19136512 Sep 4 09:20 o1_mf_s_1156169494_lr8gd9h4_.bkp

. oraenv <<< BMC3PRD

rman target /

startup nomount;

startup failed: ORA-01078: failure in processing system parameters

LRM-00109: could not open parameter file '/u02/app/oracle/product/19.0.0.0/dbhome_1/dbs/initBMC3PRD.ora'

starting Oracle instance without parameter file for retrieval of spfile

Oracle instance started

Total System Global Area 1070428224 bytes

Fixed Size 8904768 bytes

Variable Size 281018368 bytes

Database Buffers 771751936 bytes

Redo Buffers 8753152 bytes

restore spfile from '/backup/BMC3PRD/o1_mf_s_1156169494_lr8gd9h4_.bkp';

Starting restore at 04-SEP-25

using target database control file instead of recovery catalog

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=433 device type=DISK

channel ORA_DISK_1: restoring spfile from AUTOBACKUP /backup/BMC3PRD/o1_mf_s_1156169494_lr8gd9h4_.bkp

channel ORA_DISK_1: SPFILE restore from AUTOBACKUP complete

Finished restore at 04-SEP-25

startup force nomount;

restore controlfile from '/backup/BMC3PRD/o1_mf_s_1156169494_lr8gd9h4_.bkp';

Starting restore at 04-SEP-25

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=26 device type=DISK

channel ORA_DISK_1: restoring control file

channel ORA_DISK_1: restore complete, elapsed time: 00:00:05

output file name=+RECO/BMC3PRD_CR/CONTROLFILE/current.486.1210937913

Finished restore at 04-SEP-25

startup force mount;

run {

ALLOCATE CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE CHANNEL C3 DEVICE TYPE DISK;

ALLOCATE CHANNEL C4 DEVICE TYPE DISK;

RESTORE DATABASE ;

RECOVER DATABASE ;

ALTER DATABASE OPEN RESETLOGS;

}

...

Testing a restore/recover at a random Point In Time within your retention window is also quite easy: identify the autobackup of spfile and controlfile corresponding to the first one after your Point in Time target, and specify an UNTIL clause in your restore/recover database block:

rman target /

startup nomount;

startup failed: ORA-01078: failure in processing system parameters

LRM-00109: could not open parameter file '/u02/app/oracle/product/19.0.0.0/dbhome_1/dbs/initBMC3PRD.ora'

starting Oracle instance without parameter file for retrieval of spfile

Oracle instance started

Total System Global Area 1070428224 bytes

Fixed Size 8904768 bytes

Variable Size 281018368 bytes

Database Buffers 771751936 bytes

Redo Buffers 8753152 bytes

restore spfile from '/backup/BMC3PRD/o1_mf_s_1156169494_124efa11_.bkp';

...

startup force nomount;

restore controlfile from '/backup/BMC3PRD/o1_mf_s_1156169494_124efa11_.bkp';

...

startup force mount;

run {

SET UNTIL TIME "TO_DATE('10-SEP-2025 09:27:42','DD-MON-YYYY HH24:MI:SS')";

ALLOCATE CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE CHANNEL C3 DEVICE TYPE DISK;

ALLOCATE CHANNEL C4 DEVICE TYPE DISK;

RESTORE DATABASE ;

RECOVER DATABASE ;

ALTER DATABASE OPEN RESETLOGS;

}

...This time, this test is enough to make sure your backups are OK, because you just did the exact same procedure as if you were facing a real disaster case.

Duplicate from backup with or without a Point In TimeYou may not want to restore the database with the same name than the one used for production database, for obvious reasons. You can then restore with a different name thanks to the DUPLICATE command. This command is used for duplicating a target (source) to an auxiliary database (destination), but you can use it without any target, only pointing to a folder containing the backupsets. Starting the instance without a spfile is still possible with RMAN, but you’ll need to connect using target keyword first:

echo "RESTO:/u02/app/oracle/product/19.0.0.0/dbhome_1:Y" >> /etc/oratab

. oraenv <<< RESTO

rman target /

startup nomount;

exit;

rman auxiliary /

run {

ALLOCATE AUXILIARY CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C3 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C4 DEVICE TYPE DISK;

DUPLICATE DATABASE TO RESTO BACKUP LOCATION '/backup/BMC3PRD/' NOFILENAMECHECK;

}

It also works with a Point In Time:

rman auxiliary /

run {

SET UNTIL TIME "TO_DATE('10-OCT-2025 16:30:00','DD-MON-YYYY HH24:MI:SS')";

ALLOCATE AUXILIARY CHANNEL C1 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C2 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C3 DEVICE TYPE DISK;

ALLOCATE AUXILIARY CHANNEL C4 DEVICE TYPE DISK;

DUPLICATE DATABASE TO RESTO BACKUP LOCATION '/backup/BMC3PRD/' NOFILENAMECHECK;

}All these commands are fine when using OMF: I would recommend using OMF everywhere. If you don’t use OMF, you will need to take care of file names and use path conversions within RMAN commands.

Please, never do a DUPLICATE on a server where a database with the same name as the source database exists: at some point, RMAN needs to restore the controlfile with the name of the source database before changing for the new name, it can be hazardous…

This test is as good as the previous one: it can definitely validate your backups as if you were restoring your production database.

ConclusionI would summarize this blog post with these 3 things:

- you still need a strong backup strategy: do not underestimate the high importance your backups still have

- RPO and RTO are decided by your company and the DBA asks for resources allowing him to reach these targets. He must warn if resources cannot guarantee these RPO/RTO

- do regular and extensive checks of your backups, and simulate a disaster scenario at least once a year: this is good for validating RPO/RTO and good for validating the reliability of your backup strategy. This is also good for your training as you normally won’t do regular restore/recover operations

L’article You still need reliable and tested backups for your Oracle databases! est apparu en premier sur dbi Blog.

Joel Kallman Day 2025 : It’s a Wrap! (#JoelKallmanDay)

Yesterday was an Oracle community day called the Joel Kallman Day (#JoelKallmanDay) 2025. I would like to say a big thank you to everyone who took the time to join in. Here is the list of posts I saw. If I missed you out, give me a shout and I’ll add you. Along the way I … Continue reading "Joel Kallman Day 2025 : It’s a Wrap! (#JoelKallmanDay)"

The post Joel Kallman Day 2025 : It’s a Wrap! (#JoelKallmanDay) first appeared on The ORACLE-BASE Blog.Joel Kallman Day 2025 : It’s a Wrap! (#JoelKallmanDay) was first posted on October 16, 2025 at 6:47 am.©2024 "The ORACLE-BASE Blog". Use of this feed is for personal non-commercial use only. If you are not reading this article in your feed reader, then the site is guilty of copyright infringement. Please contact me at timseanhall@gmail.com

APEX IR Subscriptions #JoelKallmanDay

Interactive Reports allow to send their content per e-mail on a regular basis thanks to the subscription feature. But this can also raise some security concerns. Let’s see how to bring some kind of control into the game…

RequirementsWe developed some reporting solution for an Insurance company based on #orclAPEX. Some of the reports need to be sent on a regular basis, as Excel, to people like executive managers and that’s where Interactive Reports Subscription comes into the game.

As you can imagine, such reports may contain confidential data which should not be sent outside of the company (at least not by the reporting application itself).

There are many ways to control the e-mail flows, but in some cases it takes time to implement changes in company global rules. So, let’s start with small changes until the bigger ones can be implemented…

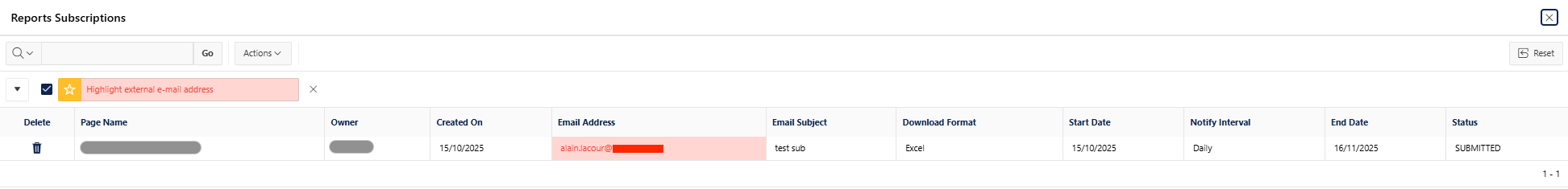

The need is to let the application Administrators see all IR subscriptions, identify the ones with a target e-mail address outside of the company and delete them.

ImplementationPrior to use of subscription, e-mail server must be configured at APEX instance level.

First we need to build the query of the report. To do so, let’s identify the APEX views which can provide the required information about the IR subscription within the APEX_DICTIONARY.

We are looking for information within the application, so view name will start by

APEX_APPLICATION_…

The reports are located in application pages, so view name will add as following APEX_APPLICATION_PAGE_…

Within the page we are looking for the Interactive Report

APEX_APPLICATION_PAGE_IR_…

And for the Interactive Report, Subscription definition is required: APEX_APPLICATION_PAGE_IR_SUB

The structure of the reports pages in scope is basic and contains only one Interactive Report. The name of the page is enough to identify the report in the list, so the APEX_APPLICATION_PAGES view needs to be joined.

There is only one workspace, so there is no need to identify the workspace, but only the application.

The query looks as following:

SELECT ap.page_id,

ap.page_name,

apis.notify_id,

apis.owner,

apis.email_address,

apis.email_subject,

apis.start_date,

apis.notify_internal,

apis.end_date,

apis.download_format,

apis.created_on,

apis.status

FROM apex_application_pages ap, apex_application_page_ir_sub apis

WHERE ap.application_id = v('APP_ID')

AND ap.application_id = apis.application_id

AND ap.page_id = apis.page_id

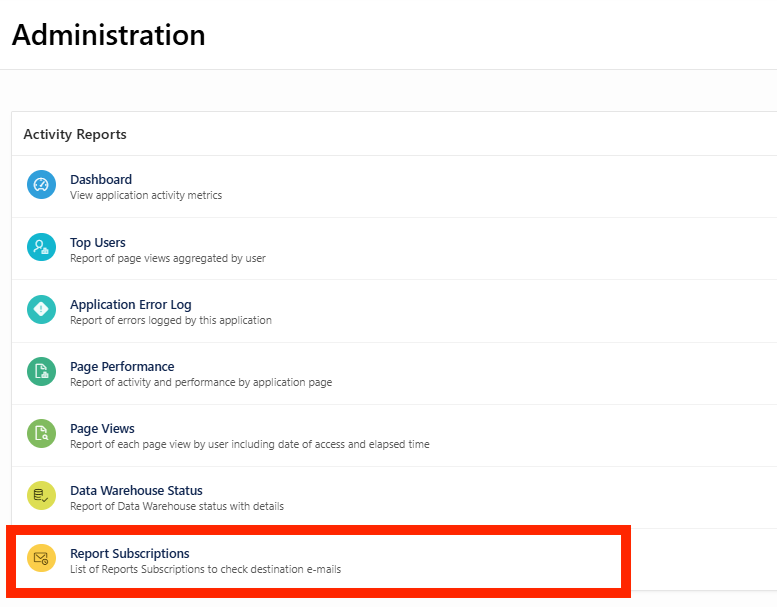

The best place to add the report in the application, is the standard administration reports provided by APEX and already in use:

The report output is an Interactive Report in a Modal Dialog like the other Administration reports. We use field highlighting to identify e-mail addresses outside of company domain …

Let’s check for a blog within the community to see how to add the delete column:

https://vinish.dev/create-delete-row-button-for-interactive-report-in-oracle-apex

The deletion procedure is DELETE_SUBSCRIPTION given by the APEX_IR PL/SQL API package. The required subscription_id parameter is given by the notify_id field in the apex_application_page_ir_sub view.

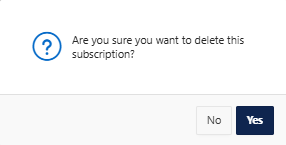

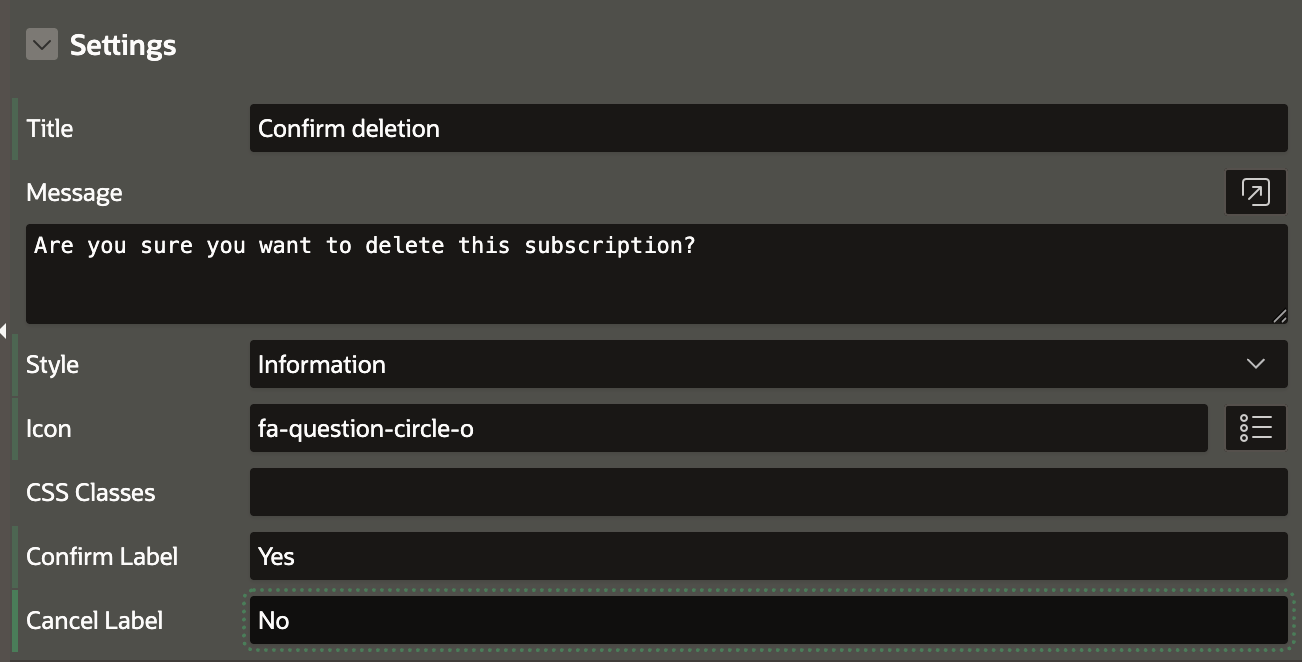

And the JavaScript Confirmation message looks like this:

thanks to following parameters:

With some basic add-on, based on APEX views and PL/SQL APIs it is easy to provide monitoring and control upon Interactive Report Subscriptions within an application.

I hope this helps you to start with such requirements…

… enjoy APEX development

L’article APEX IR Subscriptions #JoelKallmanDay est apparu en premier sur dbi Blog.

Oracle APEX, OCI, Ollama at work thanks to OllAPEX for the #JoelKallmanDay

Today is the day dedicated to the late Joel Kallman, a mentor and friend, so I thought it would be nice to show off my APEX application hosted on OCI working with LLMs run via the Ollama server located at home.

It's an interesting exercise because one learns a lot about how these "things" work, the strong and weak points of each model, which one works best for a certain task, which one has the best "vision" capability, which one creates the best embeddings.

So, here are a few short videos showing some "vibe" coding that I'll need to revise tomorrow, the analysis of two versions of the same procedure created by the same LLM, then a "live" comparison showing how other models answer the same question differently.

So far, so good.

#JoelKallmanDay #orclAPEX, #AI

Oracle AI Database 26ai Released

If you were on the internet yesterday you will have seen that Oracle announced Oracle AI Database 26ai at this year’s Oracle AI World. Mike Dietrich wrote about it here, which should answer some of the obvious questions. Here are some comments. Installations The first thing I did was download the Oracle AI Database 26ai … Continue reading "Oracle AI Database 26ai Released"

The post Oracle AI Database 26ai Released first appeared on The ORACLE-BASE Blog.Oracle AI Database 26ai Released was first posted on October 15, 2025 at 9:23 am.©2024 "The ORACLE-BASE Blog". Use of this feed is for personal non-commercial use only. If you are not reading this article in your feed reader, then the site is guilty of copyright infringement. Please contact me at timseanhall@gmail.com

Thinking about community #JoelKallmanDay

Several recent events have made me reflect about community, so I thought it might be worth writing about it for #JoelKallmanDay. Creators Communities require people who actually produce something. In the case of the Oracle community that includes bloggers, YouTubers and people who help answer questions on the internet. That’s not an all encompassing list, … Continue reading "Thinking about community #JoelKallmanDay"

The post Thinking about community #JoelKallmanDay first appeared on The ORACLE-BASE Blog.Thinking about community #JoelKallmanDay was first posted on October 15, 2025 at 8:00 am.©2024 "The ORACLE-BASE Blog". Use of this feed is for personal non-commercial use only. If you are not reading this article in your feed reader, then the site is guilty of copyright infringement. Please contact me at timseanhall@gmail.com

Fixing data faux pas with flashback table #JoelKallmanDay

Ever get that sinking feeling as you realise the change you made to your critical reference data a few minutes ago hasn’t done quite what you intended ?

If you’re running on Oracle, you may be in luck.

The Flashback Table command is perfect for those occasions when IQ is measured in milligrams of caffeine…

I must confess that I can’t hear the word “flashback” without immediately thinking of Leee John’s falsetto vocal from Imagination’s 1982

Disco classic of the same name. This goes some way to explaining the example that follows…

create table eighties_hits

(

artist varchar2(100),

track varchar2(100),

created_ts timestamp default systimestamp

)

/

alter table eighties_hits enable row movement;

I’ve included a column to hold the row creation timestamp purely to make the examples that follow a bit simpler.

Now let’s populate the table :

insert into eighties_hits( artist, track)

values('Cher', 'If I Could Turn Back Time');

insert into eighties_hits( artist, track)

values('Huey Lewis and the News', 'Back In Time');

insert into eighties_hits( artist, track)

values('Imagination', 'Flashback');

commit;Later on, we insert another row…

insert into eighties_hits( artist, track)

values('AC/DC', 'Thunderstruck');

commit;

select *

from eighties_hits

order by created_ts

/

ARTIST TRACK CREATED_TS

------------------------- ------------------------------ ----------------------------

Cher If I Could Turn Back Time 14-OCT-25 17.40.42.318599000

Huey Lewis and the News Back In Time 14-OCT-25 17.40.42.358932000

Imagination Flashback 14-OCT-25 17.40.42.399394000

AC/DC Thunderstruck 14-OCT-25 17.52.38.326151000

Some later, realisation dawns that AC/DC didn’t release Thunderstruck until 1990. We need to get our table back to how it was

before that last change.

Of course in this simple example, we’d just need to delete the last record, but to do so would be to pass up the perfect opportunity to run :

flashback table eighties_hits to timestamp (to_timestamp( '2025-10-14 17:45', 'YYYY-MM-DD HH24:MI'))

…and it’s like that last DML statement never happened :

select *

from eighties_hits

order by created_ts

/

ARTIST TRACK CREATED_TS

------------------------ ------------------------------ -----------------------------

Cher If I Could Turn Back Time 14-OCT-25 17.40.42.318599000

Huey Lewis and the News Back In Time 14-OCT-25 17.40.42.358932000

Imagination Flashback 14-OCT-25 17.40.42.399394000

Note that the flashback operation behaves in a similar way to a DDL statement in that the transaction cannot be rolled back.

Incidentally, you can also use an SCN in the command. To find the scn for a timestamp, you can use the timestamp_to_scn function :

select timestamp_to_scn( systimestamp)

from dual;There is a time limit on how long you can wait to correct issues in this way.

To find out how long you have :

select default_value, value, description

from gv$parameter

where name = 'undo_retention';

DEFAULT_VALUE VALUE DESCRIPTION

--------------- --------------- ---------------------------------------

900 604800 undo retention in seconds

The default is a mere 900 seconds ( 15 minutes). However, in this environment ( OCI Free Tier) it’s a healthy 168 hours ( about a week).

Undropping a tableIf you’ve really had a bit of a ‘mare …

drop table eighties_hits;

Then flashback table can again come to your rescue.

This time though, undo_retention is not relevant as drop table doesn’t generate any undo. Instead, you need to check to see if the table you want

to retrieve is still in the recyclebin :

select *

from recyclebin

where original_name = 'EIGHTIES_HITS';

If you can see it then, you’re in luck :

flashback table eighties_hits to before drop;

Note that any ancillary objects (e.g. indexes, triggers) will be restored but will retain their recycle bin names.

ReferencesThe Oracle Documentation for Flashback Table is typically comprehensive.

Licensing – Flashback Table is an Enterprise Edition feature .

You can find more about the Recyclebin here.

Just in case you’re wondering, Oracle Base can tell you about Joel Kallman Day .

Using Oracle API for MongoDB on OCI

If you’ve ever had to deal with MongoDB after years (or even decades) of working with SQL, you’ve probably realized that querying JSON documents can sometimes be painful, to say the least.

In response to this, Oracle (and others) use a not-so-native adaptation of SQL to query JSON documents. And if you’ve ever had to deal with these SQL-based JSON queries, you may have found yourself thinking (in hindsight) that querying JSON documents with MQL (MongoDB Query Language) wasn’t that painful after all. And rightly so : JSON documents are very different from relational tables, and there is no easy way to continue using SQL without learning some new syntax.

Oracle likely came to the same conclusion, and offers an API dedicated to MongoDB natives. The idea is simple : to ease the work of developers and database administrators when deciding to migrate from MongoDB to Oracle (and the other way around ?…)

On Oracle Cloud Infrastructure (OCI), this means being able to connect to Autonomous Databases (ADB). As a reminder, you have two of them included in the Always Free Tier of OCI, so you can play around with this feature for free before deciding to migrate to the cloud.

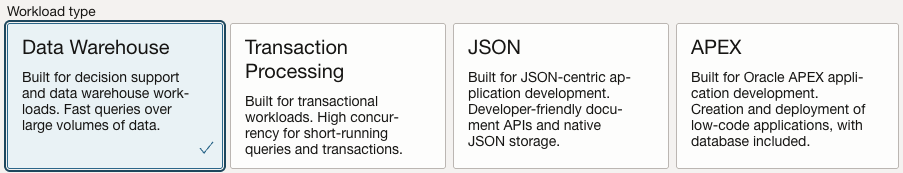

Setting up the Oracle MongoDB API on OCIWhen creating an Autonomous Database, you can decide on multiple workloads types, including a JSON workload. However, this workload type isn’t strictly required for the MongoDB API to work.

However, the network access setting of your Autonomous Database must be non-default with one of the following options enabled :

- Secure access from allowed IPs and VCNs only

- Private endpoint access only

For instance, when using the Secure access from allowed IPs and VCNs only option, you can add any IP address to the Access Control List (ACL), including your own.

Warning : If your IP address changes, you will have to update the ACL !

Warning : If your IP address changes, you will have to update the ACL !

Once the ADB (Autonomous Database) is created, you can check in the Tool configuration tab whether the MongoDB API is enabled (it should be, by default).

Then you can go in Database actions > Database Users to either create a user or modify an existing one, with the following privileges : CREATE SESSION, CREATE RESOURCE (default), and the SODA_APP role. After creation, you should enable REST as shown below. This will allow the API to work on that particular schema.

Back on the Tool configuration tab of your ADB instance, you’ll find the Public access URL. Copy it, this will be your connection string when using the API.

Connection String Example

Connection String Example

For this to work, you have to replace the user and password in the connection string. For instance, if your user is called USER_DB, and your password is userpwd, then you would use this connection string (without the brackets). Make sure to escape any special character in the password when doing so (see documentation).

mongodb://user_db:userpwd@A49E7CHE9B320E6-PCCVX8PAE2NZPVBQ.adb.eu-zurich-1.oraclecloudapps.com:27017/user_db?authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true'Use this connection string with mongosh or any tool provided by MongoDB. With mongosh, you would connect with this command :

mongosh 'mongodb://user_db:userpwd@A49E7CHE9B320E6-PCCVX8PAE2NZPVBQ.adb.eu-zurich-1.oraclecloudapps.com:27017/adb_admin?authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true'You are now connected to the Oracle ADB instance through the mongosh utility.

If you connect to an existing schema with tables, you will notice that running show collections (or show tables) doesn’t return anything. In Oracle 19c, only collections/tables created through the API appear in this list. Standard tables create with a CREATE TABLE SQL statement won’t be displayed.

Once connected, you can query the different databases (schemas, in Oracle terms) of your instance. However, unlike in MongoDB, you can’t administer the instance through mongosh.

You can look at the documentation to check whether the features you’re interested in are included in the API, but here is an extract of the most notable changes :

- All the most basic aggregated pipelines are available in the API, but more complex ones like

$currentOpor$mergeare not yet supported. - Other aggregation operators, like

$bucket,$documentsor$sampleare only available starting with Oracle 23ai. db.dropDatabase()doesn’t drop the Oracle schema. In fact, it doesn’t even deletes everything in the schema. It only deletes all real collections inside of it. So if you have a traditional table that is not a considered as a collection (not shown inshow collections), it will not be deleted by this command.

MongoServerError: Database connection unavailable

You might encounter the following error :

MongoServerError: Database connection unavailable. Ensure that the user exists and the schema is enabled for use with Oracle REST Data Services. A schema can be enabled by calling the PL/SQL procedure ORDS.ENABLE_SCHEMAIf you see this message, you can either follow the procedure or remember to enable REST directly on the OCI Database Actions panel, as shown above.

In short, Oracle’s MongoDB API provides an interesting bridge between the relational and document worlds. Even though some MongoDB features are supported yet, the API offers a straightforward way to connect MongoDB and Oracle Autonomous Database, making it ideal for testing, migration or even hybrid setups. For developers used to MQL, it can significantly ease the transition to Oracle’s ecosystem.

L’article Using Oracle API for MongoDB on OCI est apparu en premier sur dbi Blog.

Oracle EBS CVE-2025-61884 Patches Made Available After Initial Release

Oracle released an out-of-cycle security alert, CVE-2025-61884, on Saturday, October 11, 2025, and provided My Oracle Support (MOS) Note ID 3107176.1 with generic instructions on securing Oracle E-Business Suite (EBS). On Sunday night, Oracle updated the MOS Note with two patches to address the two security vulnerabilities that comprise the publicly disclosed exploit impacting Oracle EBS.